🎄12 Days of HPC

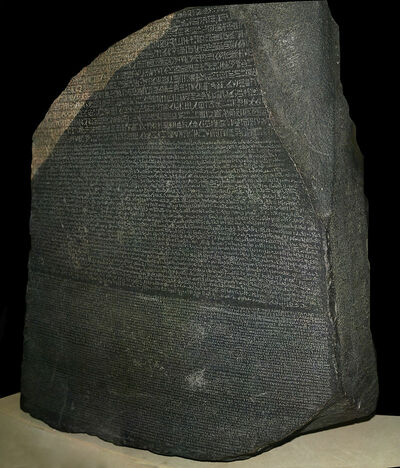

Mind your language

During the month of December we’re featuring blog posts by researchers from across the University of Leeds, showcasing the fantastic work they do using our High Performance Computing (HPC) system. Follow us @RC_at_Leeds to keep up to date with our 12 days of HPC blog series.

What’s your name?

Serge Sharoff

What department do you work in?

Centre for Translation Studies, School of Languages, Cultures and Societies

What research question are you trying to answer?

The field of Digital Humanities is fairly broad. Our research questions concern using AI tools to analyse anything that is expressed through the medium of language, for example:

- What is the balance of information, argumentation and propaganda in this political speech? What is the balance of such texts across the entire Web?

- How difficult is this text for a language learner?

- What is the likely age of the author of this blog post?

- Judging by mentions in social media, is this COVID-19 mitigation measure commonly accepted or rejected by the public? What are the conditions for accepting it?

- Can we improve Machine Translation for distant language pairs, such as Chinese-English?

- Can we predict whether this Machine Translation output is crap?

How does HPC help your research?

Any language processing task requires considerable resources. Statistically speaking, language consists of a large number of rare events, so we need billions of data points to capture meaningful statistics about them. In addition, modern methods are based on Deep Learning, which requires GPUs to train neural models. This makes HPC indispensable for this kind of research.

What is the potential impact of your research?

Language is the backbone of human communication, so any progress in understanding language leads to improved communication in society. Two really big examples of how Language Technology helps are Internet search and Machine Translation.

In your personal opinion what’s the coolest thing about your research?

Language is a prime example of Big Data. Every day an average student reads or hears about 30,000 words, which might come from textbooks, research papers, manuals, news, Facebook feeds, WhatsApp chats, watching TV, etc. This adds up to more than 10 million words per year for each of us, and each of us gets them from very different sources. On the other hand, people know hundreds of thousands of words, but they do not use each of them often. For example, the word ‘unicyclist’ occurs once per 23 million words. At a rate of 10 million words per year, we’re likely to encounter this word once every two to three years (unless you belong to a community of unicyclists). This makes it very difficult to build a model of the word that can predict which words are likely to come before or after it. This is one of the challenges of linguistic research, but it also makes the research all the more exciting.
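The back-of-the-envelope arithmetic above can be checked with a few lines of Python. This is only a sketch: the inputs (30,000 words a day, 365 days a year, one ‘unicyclist’ per 23 million words) are the rough figures quoted in the answer, and the helper names are hypothetical, chosen for illustration.

```python
# Rough figures quoted in the text (assumed inputs, not measured data).
WORDS_PER_DAY = 30_000              # words an average student reads or hears per day
DAYS_PER_YEAR = 365
WORDS_PER_OCCURRENCE = 23_000_000   # 'unicyclist' occurs about once per 23 million words


def words_per_year(words_per_day: int, days: int = DAYS_PER_YEAR) -> int:
    """Total number of words encountered in a year (hypothetical helper)."""
    return words_per_day * days


def years_between_occurrences(words_per_occurrence: int, yearly_words: int) -> float:
    """Expected number of years between two encounters with a rare word."""
    return words_per_occurrence / yearly_words


yearly = words_per_year(WORDS_PER_DAY)
gap = years_between_occurrences(WORDS_PER_OCCURRENCE, yearly)
print(f"{yearly:,} words per year")
print(f"one 'unicyclist' roughly every {gap:.1f} years")
```

With these inputs the yearly total is 10,950,000 words, and the gap comes out at about 2.1 years; using the rounded figure of 10 million words per year gives 2.3 years, both in line with the "two to three years" estimate.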

What’s your favourite Christmas film?

Fanny and Alexander by Bergman. The choice may look unusual, but the film is beautiful and uplifting.